A subsequent step is that, per test type and based on the chosen depth of testing, one or more suitable test techniques are

selected with which the test is to be specified and executed. If an object part is divided into test units, techniques are

allocated per test unit. But how, then, do you select the suitable techniques? Variations can be made on these, and there

are other techniques, including those you create yourself. Checklists, too, can be used as a technique. This choice is,

besides the choice of depth of testing, strongly dependent on a number of other aspects:

-

Test basis - Are the tests to be based on requirements; is the functional design written in pseudo-code or easily

converted to it; are there state-transition diagrams or decision tables, or is it very informal with a lot of

knowledge residing in the heads of the domain experts? Some techniques rely heavily on the availability of a

certain form of described test basis, while with others the test basis may be an unstructured and poorly documented

collection of information sources.

-

Test type / quality characteristics - What is to be tested? Some test design techniques are mainly suitable for

testing the interaction (screens, reports, online) between system and user; others are more suitable for testing

the relationship between the administrative organisation and the system, for testing performance or security, or

for testing complex processing (calculations), and yet others are intended for testing the integration between

functions and/or data. Checklists are also often used for testing non-functional quality characteristics. All of

these relate to the type of defects that can be found with the aid of the technique, e.g. incorrect

input checks, incorrect processing or integration errors.

-

What kind of variations should be covered, and to what degree? What depth of testing is required? This should be

expressed in the definition of one or more forms of coverage and associated basic techniques. See Coverage Types And Basic Techniques.

-

Knowledge and expertise of the available testers - Have the testers already been trained in the technique; are they

experienced in it, or does the choice of a particular technique mean that the testers need to be trained and

coached in it? Is the technique really suitable for the available testers? Users are normally not professional

testers.

-

Labour-intensiveness - How labour-intensive are the selected techniques, and is this in proportion to the estimated

amount of time? Sometimes other techniques should be chosen, with possibly different coverage, to remain within

budget. If this means less thorough testing is to be carried out than was agreed, the client should, of course, be

informed!

After covering the above aspects, the test manager makes a selection of techniques to be used. An example is set out

below:

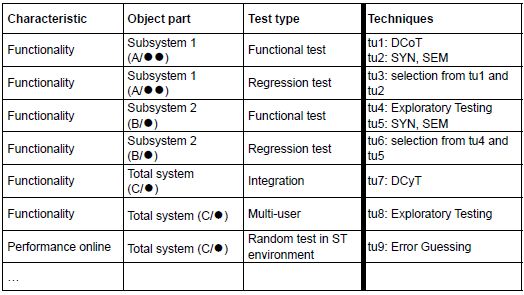

ST example:

In this example, the testing of subsystem 1 is spread across test units (“tu”) 1 and 2; subsystem 2 consists of test

units 4 and 5. Test unit 1, with many complex calculations, is nevertheless given a rather light technique, the

Data Combination Test (because the client opted for an average depth of testing); test unit 4 contains processing

functionality and is allocated the (very free) “technique” of Exploratory Testing; test units 2 and 5 consist mainly of

screens and are each given 2 techniques: the Syntactic and Semantic Test. The total system is then tested for coherence

with the Data Cycle Test (test unit 7) and the multi-user aspect with Exploratory Testing (test unit 8). Later

regression tests consist of a selection of previously created test cases (test units 3 and 6). Finally, a light

Performance test is carried out using Error Guessing (test unit 9).
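
The allocation described in the ST example can also be written down as a simple mapping from test unit to techniques, which makes it easy to query. The following is only a minimal sketch: the dictionary contents are transcribed from the paragraph above, while the names `st_allocation` and `units_using`, and the label used for the regression test units, are assumptions made for illustration.

```python
# Technique allocation per test unit, transcribed from the ST example text.
# The variable and function names are hypothetical, chosen for this sketch.
st_allocation = {
    1: ["Data Combination Test"],
    2: ["Syntactic Test", "Semantic Test"],
    3: ["Selection of existing test cases"],  # regression test (label assumed)
    4: ["Exploratory Testing"],
    5: ["Syntactic Test", "Semantic Test"],
    6: ["Selection of existing test cases"],  # regression test (label assumed)
    7: ["Data Cycle Test"],
    8: ["Exploratory Testing"],
    9: ["Error Guessing"],
}


def units_using(allocation, technique):
    """Return the sorted test unit numbers to which a technique is allocated."""
    return sorted(tu for tu, techniques in allocation.items() if technique in techniques)


# Every test unit should have at least one technique allocated.
unallocated = [tu for tu, techniques in st_allocation.items() if not techniques]

print(units_using(st_allocation, "Exploratory Testing"))
print(unallocated)
```

A mapping like this could serve as a quick check that no test unit is left without a technique before the plan is agreed with the client.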

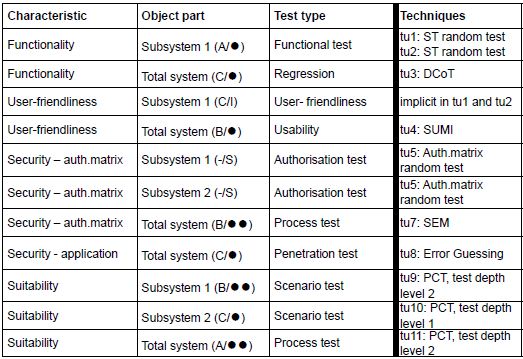

UAT example:

In this example, use is made in test units 1 and 2 of ST test cases. The regression test on the total system takes

place with the light Data Combination Test. User-friendliness is implicitly tested simultaneously with test units 1 and

2 by evaluating the testers’ impressions after completion. Thereafter, an explicit test takes place with the aid of the

SUMI checklist (see Usability Test). The authorisation matrix is first randomly checked for correct

input, and then the authorisations are dynamically checked using the Semantic Test. A light penetration test takes

place using Error Guessing, and Suitability is tested using the Process Cycle Test.

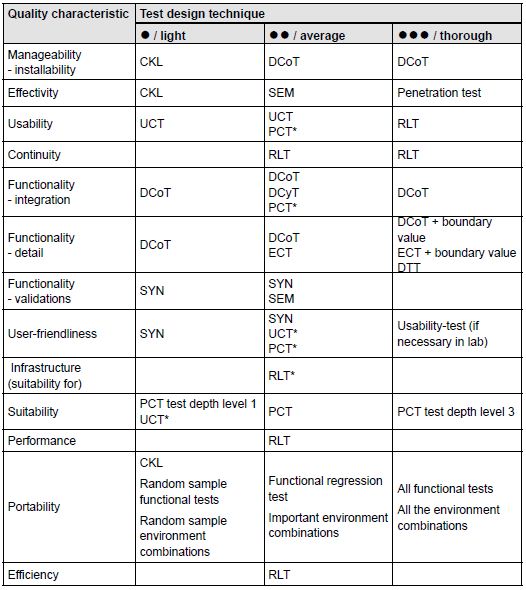

If the decision has been made to perform dynamic explicit testing, the table below can provide assistance in selecting

the test design techniques to be employed. Per quality characteristic, the table provides various test design

techniques that are suitable for testing the relevant characteristic. This table can also be found at www.tmap.net.

For the relevant quality characteristic, usable test design techniques are mentioned, making a distinction in respect

of the thoroughness of the test. ● means light, ●● average, and ●●● thorough. The techniques mentioned should be seen

as obvious choices and are intended to provide inspiration. The table is certainly not meant to be prescriptive – other

choices of techniques are of course allowed.

Notes on the above table:

Abbreviations used:

-

* - If the technique is adapted to some extent, this can be used to test the relevant quality characteristic

-

DTT - Decision table test

-

CKL - Checklist

-

DCoT - Data combination test

-

DCyT - Data cycle test

-

ECT - Elementary comparison test

-

PCT - Process cycle test (test depth level = 2)

-

RLT - Real-life test

-

SEM - Semantic test

-

SYN - Syntactic test

-

UCT - Use case test

For a comprehensive description of these techniques, please refer to Test Design Techniques.

Concepts used:

-

Environment combinations - In portability testing, it is examined whether the system will run in various

environments. Environments can be made up of various things, such as hardware platform, database system, network,

browser and operating system. If the system is required to run on 3 (versions of) operating systems, under 4

browsers (or browser versions), this runs to 3 x 4 = 12 environment combinations to be tested.

-

Penetration test - The penetration test is aimed at finding holes in the security of the system. This test is

usually carried out by an ‘ethical hacker’.

-

Portability – functional tests - To test portability, functional tests can be carried out in a particular environment. In

increasing depth, these are a random sample of the functional tests, the regression test, or all the test cases.

-

Usability test - A test in which the users can simulate business processes and try out the system. By observing the

users during the test, conclusions can be drawn concerning the quality of the test object. A specially arranged and

controlled environment that includes video cameras and a room with a two-way mirror for the observers is known as a

usability lab.
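
The arithmetic given under "Environment combinations" (3 operating systems x 4 browsers = 12 combinations) can be illustrated with a short enumeration. This is a sketch only: the operating system and browser names are placeholders, and the function name `environment_combinations` is an assumption, not something defined by the method.

```python
from itertools import product

# Placeholder environment dimensions; the concrete values are hypothetical.
operating_systems = ["os_a", "os_b", "os_c"]
browsers = ["browser_a", "browser_b", "browser_c", "browser_d"]


def environment_combinations(*dimensions):
    """Return every combination that takes one value from each dimension."""
    return list(product(*dimensions))


combinations = environment_combinations(operating_systems, browsers)

# 3 operating systems x 4 browsers = 12 environment combinations.
print(len(combinations))
for operating_system, browser in combinations:
    print(operating_system, browser)
```

Adding a further dimension, such as a database system, multiplies the total again, which is why the number of environments to be tested can grow quickly.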

See also Test Types.
